Debugging


magnet.debug.overfit(trainer, data, batch_size, epochs=1, metric='loss', **kwargs)

Runs training on small samples of the dataset in order to overfit.

If you can’t overfit a small sample, you can’t model the data well.

This debugger tries to overfit on multiple small samples of the data. The sample size and batch size are varied, and training runs for a fixed number of epochs.

This usually gives insight into what to expect from the actual training.

Parameters:

- trainer (magnet.training.Trainer) – The Trainer object

- data (magnet.data.Data) – The data object used for training

- batch_size (int) – The intended batch size

- epochs (float) – The expected number of epochs for convergence on 1% of the data. Default: 1

- metric (str) – The metric to plot. Default: 'loss'

Note

The maximum sample size is 1% of the size of the dataset.

Examples:

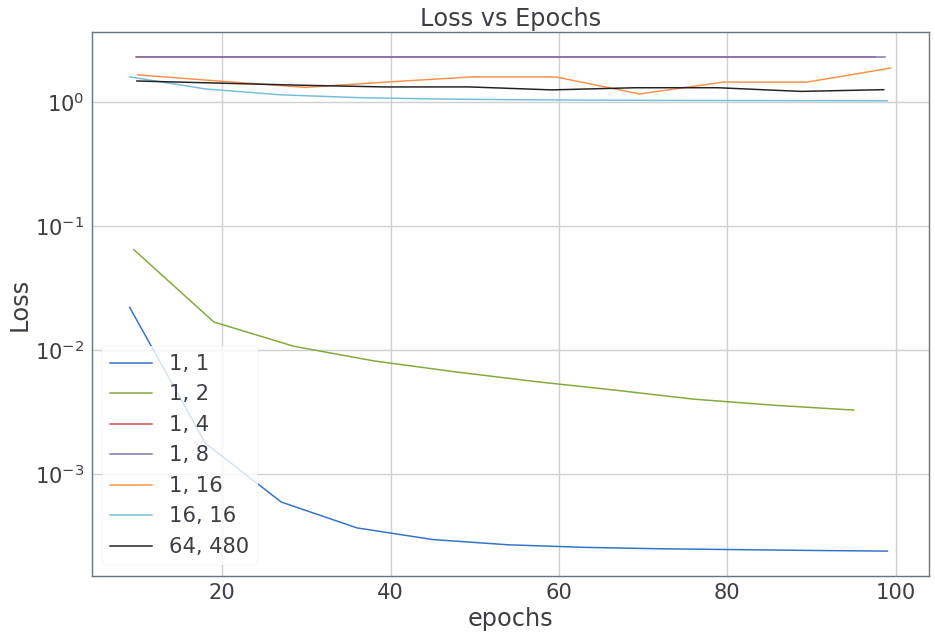

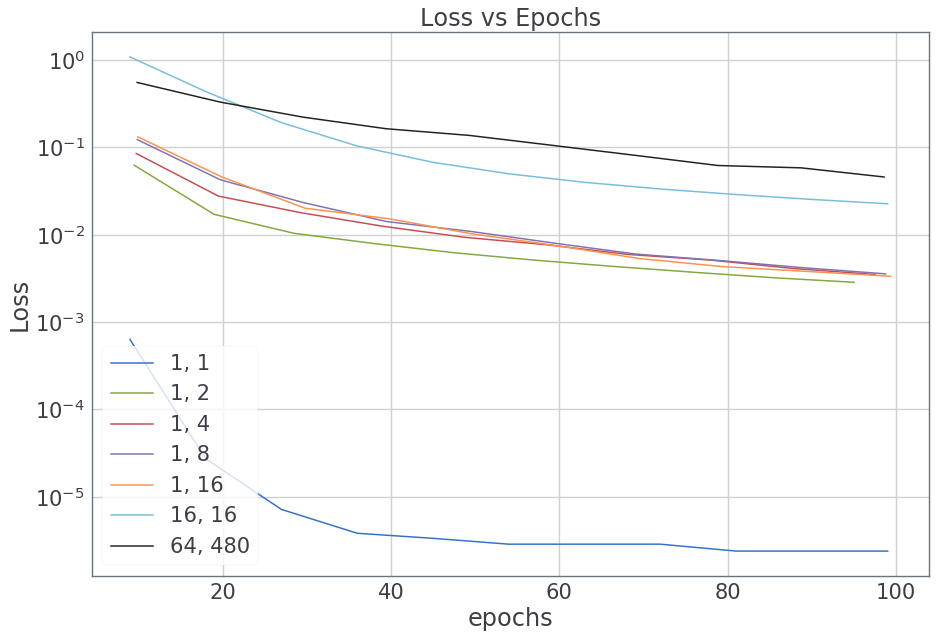

>>> import magnet as mag
>>> import magnet.nodes as mn
>>> import magnet.debug as mdb
>>> from magnet.data import Data
>>> from magnet.training import SupervisedTrainer
>>> data = Data.get('mnist')
>>> model = mn.Linear(10)
>>> with mag.eval(model): model(next(data())[0])
>>> trainer = SupervisedTrainer(model)
>>> mdb.overfit(trainer, data, batch_size=64)

>>> # Oops! Looks like something went wrong.
>>> # The loss does not decrease considerably for sample sizes >= 4.
>>> # Of course, the activation was 'relu'.
>>> model = mn.Linear(10, act=None)
>>> with mag.eval(model): model(next(data())[0])
>>> trainer = SupervisedTrainer(model)
>>> mdb.overfit(trainer, data, batch_size=64)
>>> # Should be much better now.
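The idea behind the overfitting check can be illustrated without MagNet. The following is a minimal, framework-free sketch, not the library's implementation: `sample_sizes` and `fit_line` are hypothetical helpers, the doubling schedule of sample sizes is an assumption, and only the 1% cap comes from the note above. It fits a toy linear model on growing samples and reports the loss before and after training.

```python
import random


def sample_sizes(dataset_size):
    """Doubling sample sizes, capped at 1% of the dataset.

    The doubling schedule is an assumption made for illustration;
    only the 1% cap is taken from the documentation.
    """
    cap = max(1, dataset_size // 100)
    sizes, n = [], 1
    while n <= cap:
        sizes.append(n)
        n *= 2
    return sizes


def fit_line(sample, steps=200, lr=0.2):
    """Fit y = w * x + b by gradient descent on the mean squared error.

    Returns the loss before and after training.
    """
    w, b = 0.0, 0.0

    def mse():
        return sum((w * x + b - y) ** 2 for x, y in sample) / len(sample)

    initial = mse()
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in sample) / len(sample)
        grad_b = sum(2 * (w * x + b - y) for x, y in sample) / len(sample)
        w -= lr * grad_w
        b -= lr * grad_b
    return initial, mse()


# Toy, noise-free dataset: a model that can represent it should overfit easily.
random.seed(0)
dataset = [(x, 3.0 * x + 1.0) for x in (random.random() for _ in range(2000))]

for n in sample_sizes(len(dataset)):
    initial, final = fit_line(dataset[:n])
    print(f"sample size {n:>2}: loss {initial:.4f} -> {final:.6f}")
```

If the final loss on such tiny samples barely moves, the problem is more likely in the model or the training setup than in the amount of data, which is the situation the first example above runs into.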


magnet.debug.check_flow(trainer, data)

Checks if any trainable parameter is not receiving gradients.

Especially useful for large architectures that use the detach() function.

Parameters:

- trainer (magnet.training.Trainer) – The Trainer object

- data (magnet.data.Data) – The data object used for training
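As a rough illustration of what such a check looks for (this is a sketch, not the code behind `check_flow`), the hypothetical helper below lists trainable parameters whose gradient is still `None`. It accepts any iterable of `(name, parameter)` pairs exposing `requires_grad` and `grad`, which is the shape of what PyTorch's `named_parameters()` yields; stand-in objects are used here so the example runs without PyTorch.

```python
from types import SimpleNamespace


def params_without_grad(named_params):
    """Return names of trainable parameters whose gradient is still None.

    `named_params` is any iterable of (name, parameter) pairs whose
    parameters expose `requires_grad` and `grad`.
    """
    return [
        name
        for name, param in named_params
        if param.requires_grad and param.grad is None
    ]


# Stand-in parameters (hypothetical names and values) describing the state
# one might see after a backward pass.
fake_params = [
    # Trainable but cut off from the loss, e.g. behind a detach() call.
    ("encoder.weight", SimpleNamespace(requires_grad=True, grad=None)),
    # Trainable and receiving a gradient.
    ("decoder.weight", SimpleNamespace(requires_grad=True, grad=[0.1])),
    # Frozen on purpose, so a missing gradient is expected.
    ("embedding.weight", SimpleNamespace(requires_grad=False, grad=None)),
]

print(params_without_grad(fake_params))
```

Frozen parameters are skipped deliberately: only parameters that are meant to train but receive nothing indicate a broken gradient path.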

class magnet.debug.Babysitter(frequency=10, **kwargs)

A callback which monitors the mean relative gradients for all parameters.

Parameters: frequency (int) – The number of times per epoch to monitor. Default: 10

Keyword Arguments: name (str) – Name of this callback. Default: 'babysitter'
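The documentation does not define "mean relative gradient" or how the monitoring points are spaced, so the sketch below rests on two assumptions: the relative gradient of an element is taken as |gradient| / |value|, and `frequency` monitoring points are spread evenly over the batches of one epoch. Both helper names are hypothetical and not part of MagNet.

```python
def mean_relative_gradient(values, grads, eps=1e-8):
    """Mean of |gradient| / |value| over the elements of one parameter.

    `eps` avoids division by zero for parameters that are exactly zero.
    """
    ratios = [abs(g) / (abs(v) + eps) for v, g in zip(values, grads)]
    return sum(ratios) / len(ratios)


def monitor_steps(batches_per_epoch, frequency=10):
    """Batch indices to monitor, spread evenly over one epoch.

    If the epoch has fewer batches than `frequency`, every batch is monitored.
    """
    return sorted({i * batches_per_epoch // frequency for i in range(frequency)})


# A parameter with two elements and their gradients (made-up numbers).
print(mean_relative_gradient([1.0, -2.0], [0.1, 0.2]))
print(monitor_steps(100, frequency=10))
```

Under this reading, a value that stays very small suggests the parameter is barely being updated, while a very large one suggests unstable updates.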


magnet.debug.shape(debug=True)

Prints the shape of every tensor when a module is called within this context manager.

Useful for debugging the flow of tensors through layers and finding the values of various hyperparameters.

Parameters: debug (bool or str) – If str, only the tensor with this name is tracked. If True, all tensors are tracked. Otherwise, nothing is tracked.
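The three-way meaning of `debug` can be sketched with a small context manager. This is an illustration only: `shape_debug`, `is_tracked`, and `report` are hypothetical names, and the real context manager presumably hooks into module calls rather than relying on explicit `report` calls.

```python
from contextlib import contextmanager

_debug = False  # current tracking mode; nothing is tracked outside the context


def is_tracked(debug, tensor_name):
    """Apply the rule from the parameter description to one tensor name."""
    if isinstance(debug, str):
        return tensor_name == debug  # only the named tensor is tracked
    return debug is True             # True tracks everything, anything else nothing


@contextmanager
def shape_debug(debug=True):
    """Temporarily switch the tracking mode, restoring the old one on exit."""
    global _debug
    previous, _debug = _debug, debug
    try:
        yield
    finally:
        _debug = previous


def report(name, shape):
    """Print the shape if the tensor is tracked; return whether it was printed."""
    if is_tracked(_debug, name):
        print(f"{name}: {shape}")
        return True
    return False


with shape_debug("hidden"):
    report("hidden", (64, 128))  # printed: matches the tracked name
    report("logits", (64, 10))   # skipped: a different name
```

Checking for a string before checking for `True` keeps the two modes distinct, and restoring the previous mode in `finally` means tracking stops even if the wrapped code raises.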